Political Disinformation Campaign on YouTube

Uncovering a political disinformation campaign using natural language processing and AI

The New York Times reported that the Internet Research Agency (IRA), a firm believed to be funded by the Russian state government, hired "trolls" to post divisive content about American political issues to social media sites like Facebook, Twitter, YouTube, Reddit, and Tumblr in order to sow political discord. These efforts were also detailed in the bipartisan report by the Senate Intelligence Committee. In 2018, Twitter published an extensive dataset of content posted by and associated with the IRA's activity on the platform, allowing researchers to study their behavior (Linvill and Warren, Im et al., Cleary and Symantec).

While Twitter and Facebook are often the focus of media coverage of these types of troll campaigns, in this post we describe how our tools helped us find a new, sophisticated YouTube political troll campaign with 8M+ views one year out from the 2020 U.S. presidential election. Our tools aim to help detect similar campaigns by networks of bot, troll, and propaganda accounts for OSINT (open source intelligence) purposes.

Plasticity is currently contracted to work with the U.S. Air Force to conduct research and build solutions for military intelligence analysts. The software described in this post is connected to that effort, but the data, analysis, and any conclusions reached are our own and do not represent the official views of the U.S. Air Force, the Department of Defense, or the U.S. federal government.

Upon discovering the disinformation campaign described in this post, we made a responsible disclosure to Google / YouTube so that they could review and suspend the accounts in accordance with their policies and put countermeasures in place to stop the campaign.

Plasticity's work was covered by CNN, which broke the story on our report both online and on its TV network.

On October 30, 2017, YouTube released a statement on their findings during the 2016 election:

"We found 18 channels likely associated with this campaign that made videos publicly available, in English and with content that appeared to be political...there were 1108 such videos uploaded, representing 43 hours of content...These videos generally had very low view counts; only around 3 percent had more than 5,000 views."

We have found evidence of a more significant campaign with accounts linked to the Russia and Ukraine region, much larger than the one previously found, driving higher engagement with users and thriving on YouTube. We show some of the data our intelligence tools collected below.

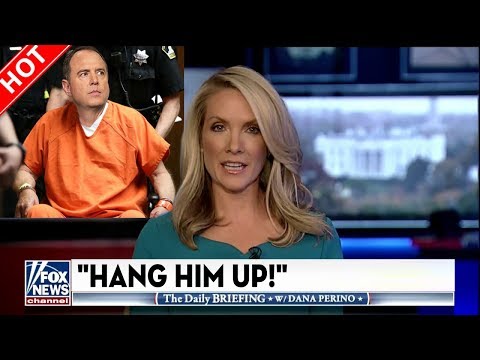

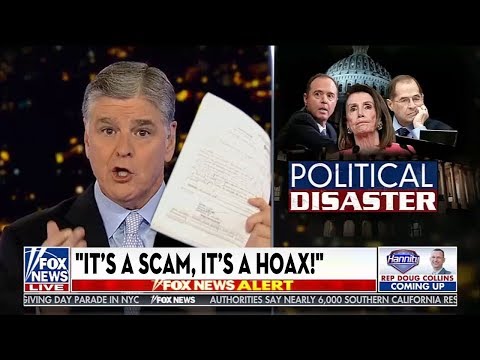

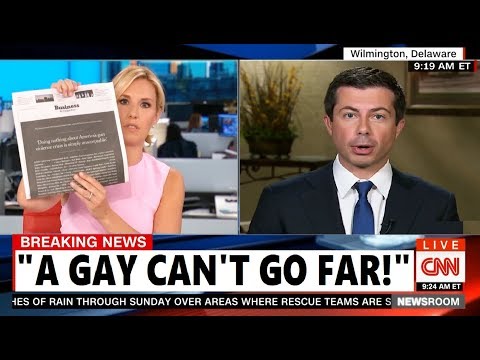

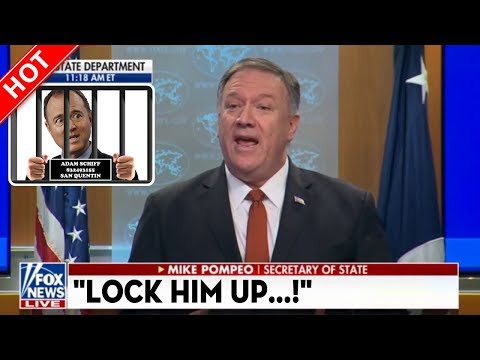

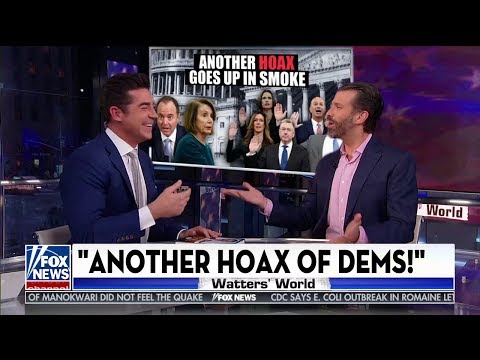

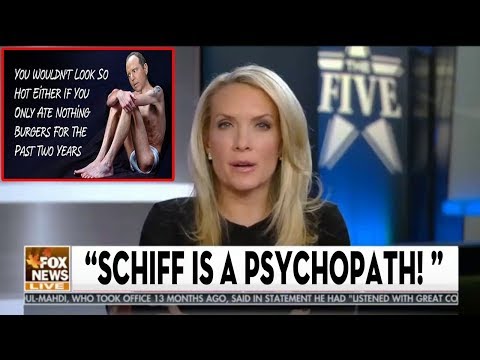

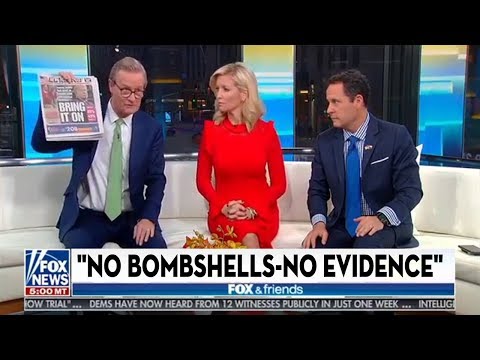

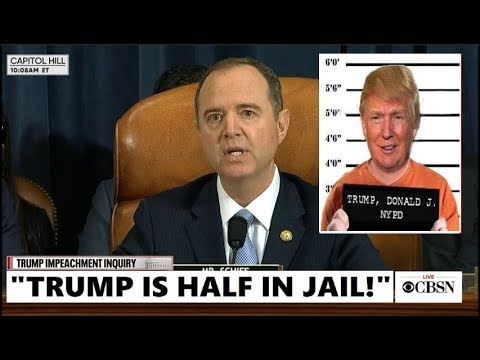

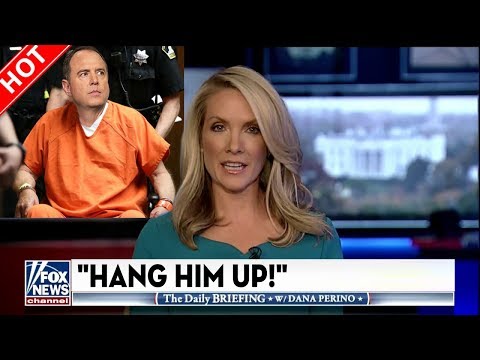

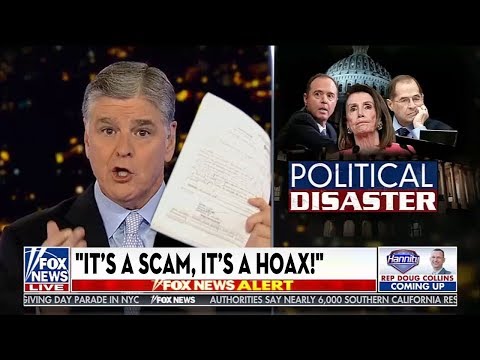

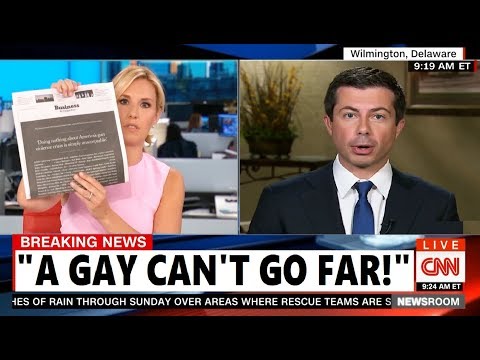

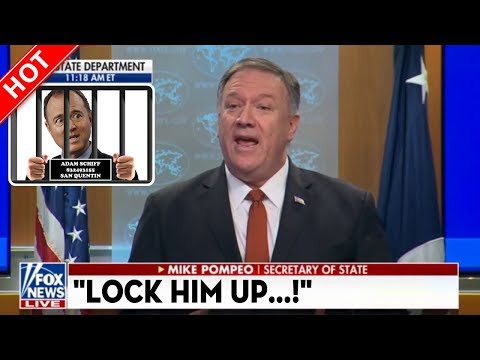

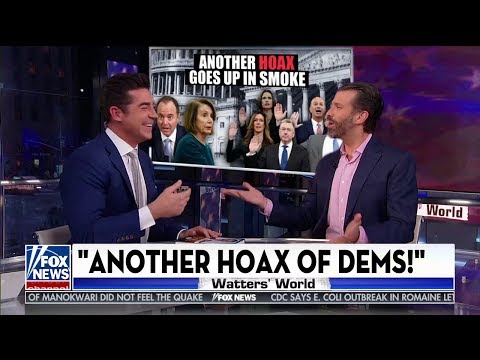

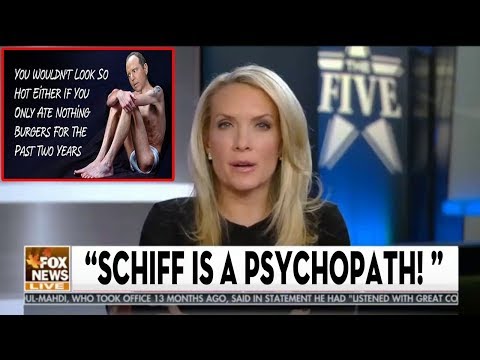

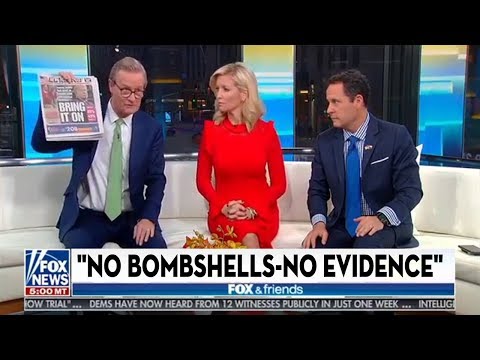

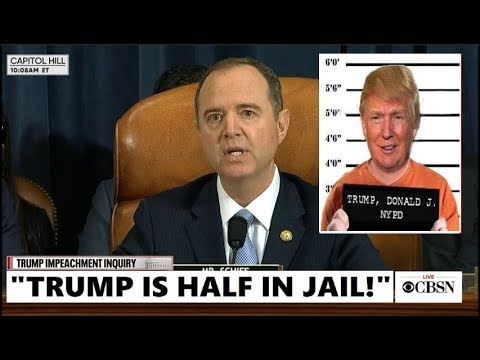

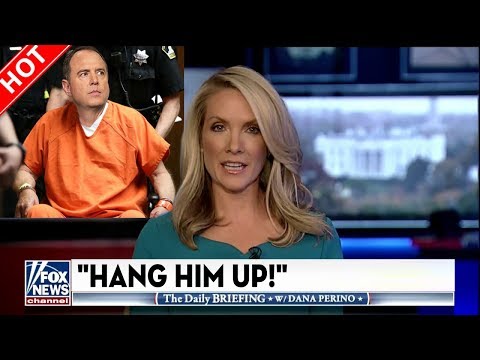

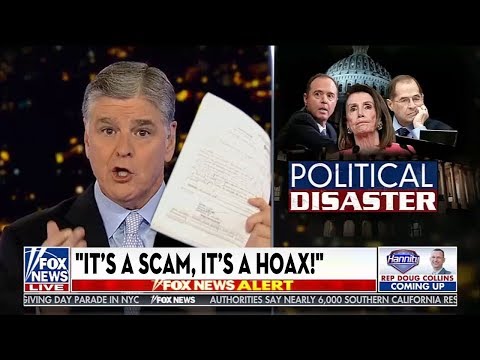

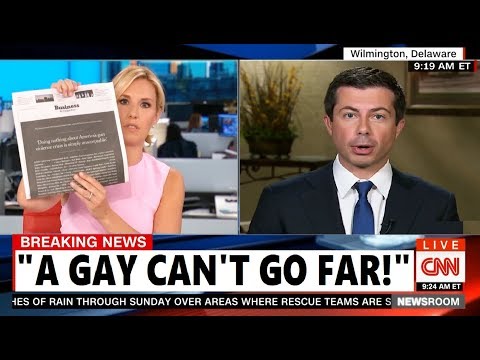

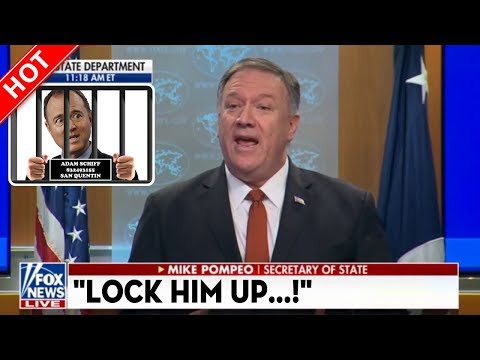

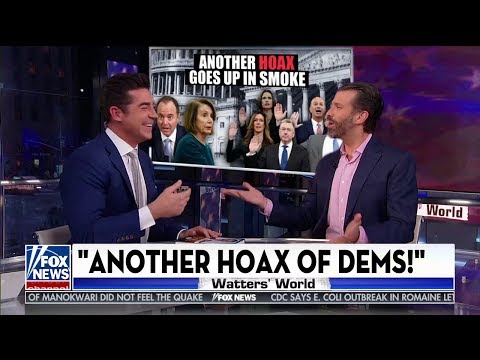

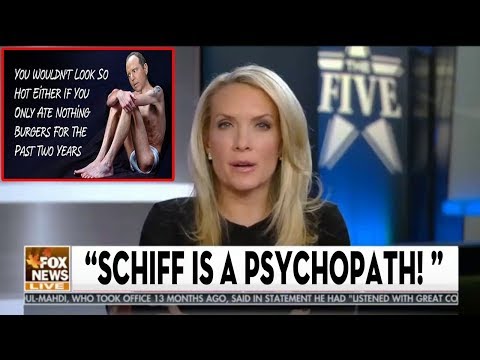

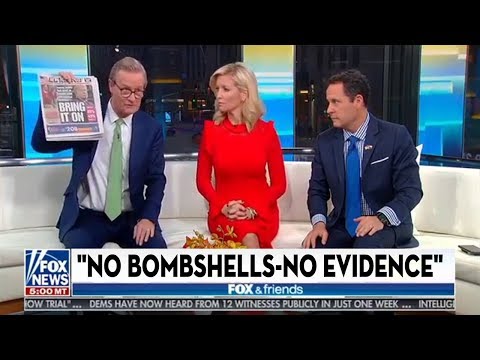

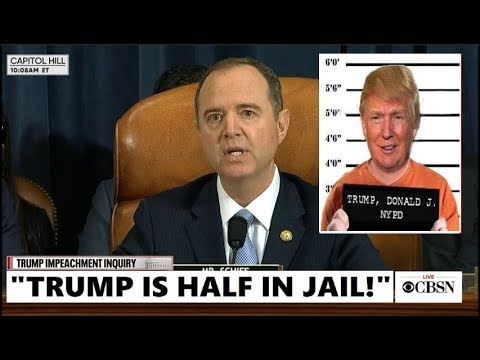

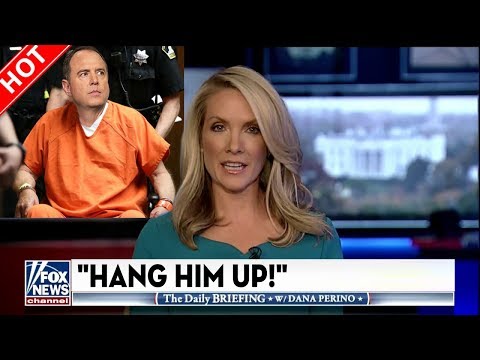

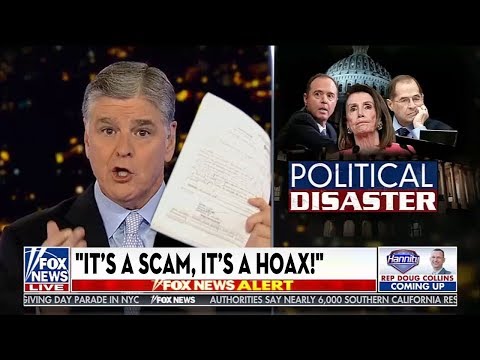

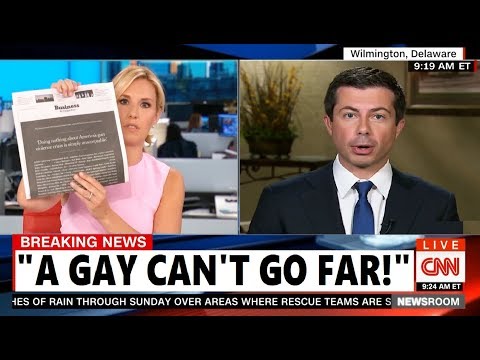

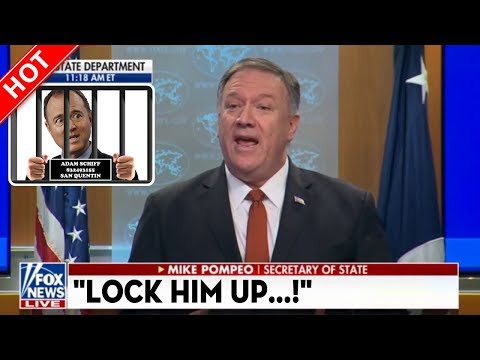

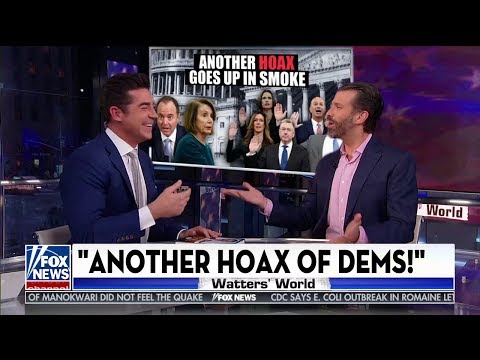

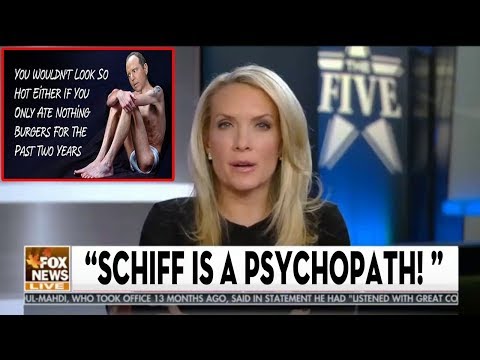

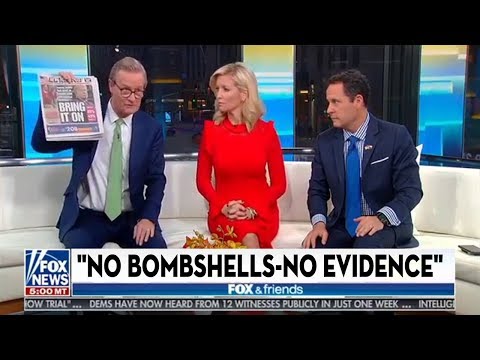

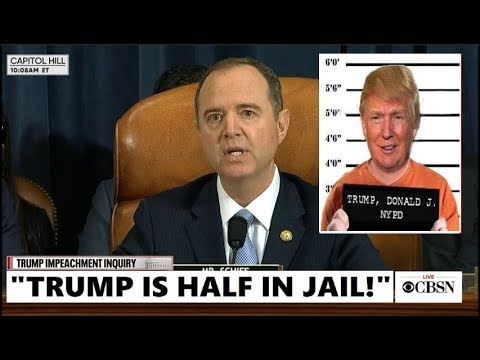

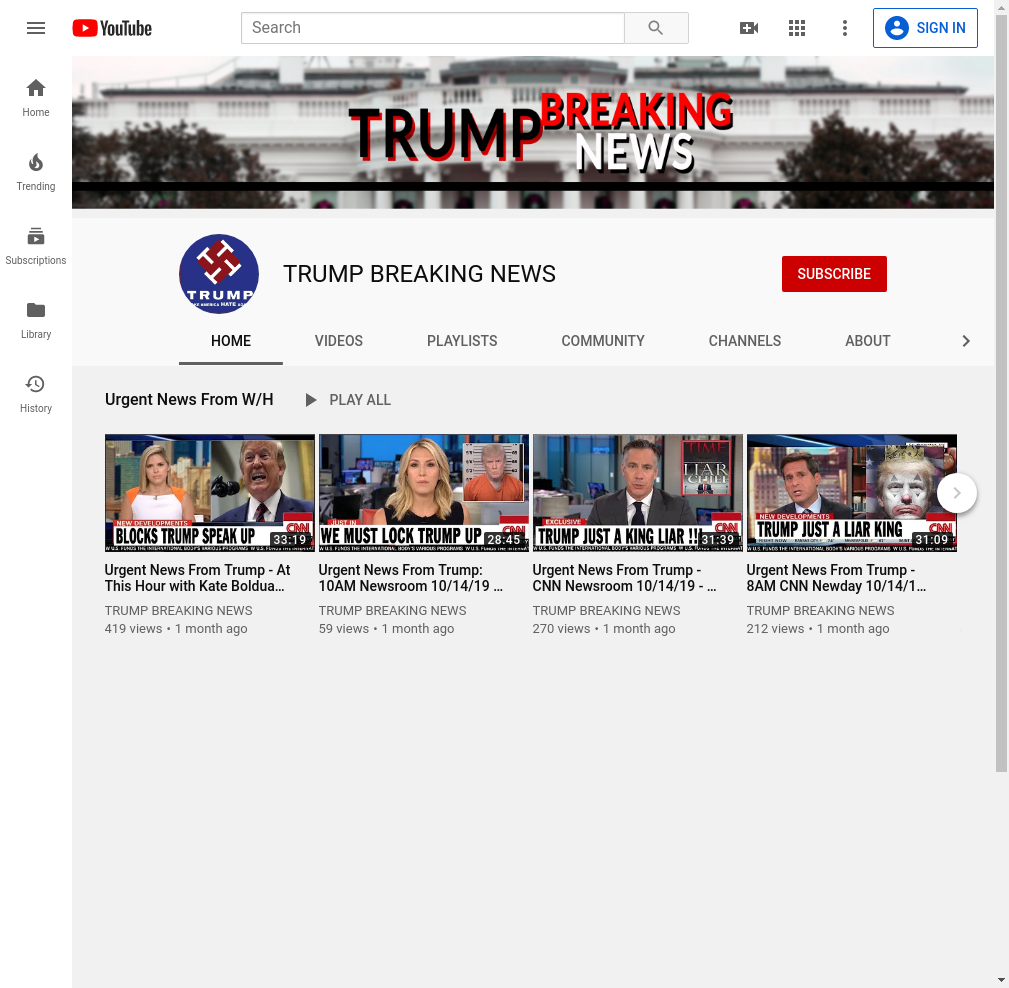

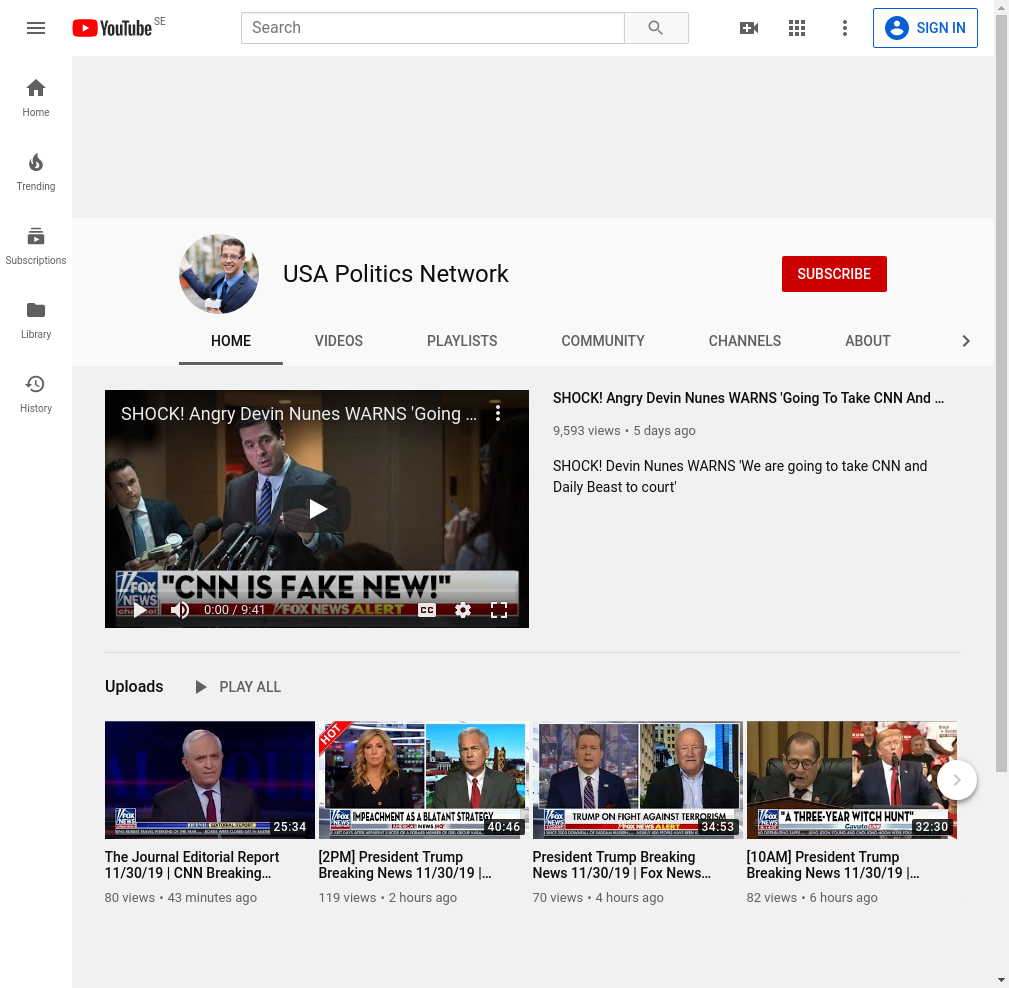

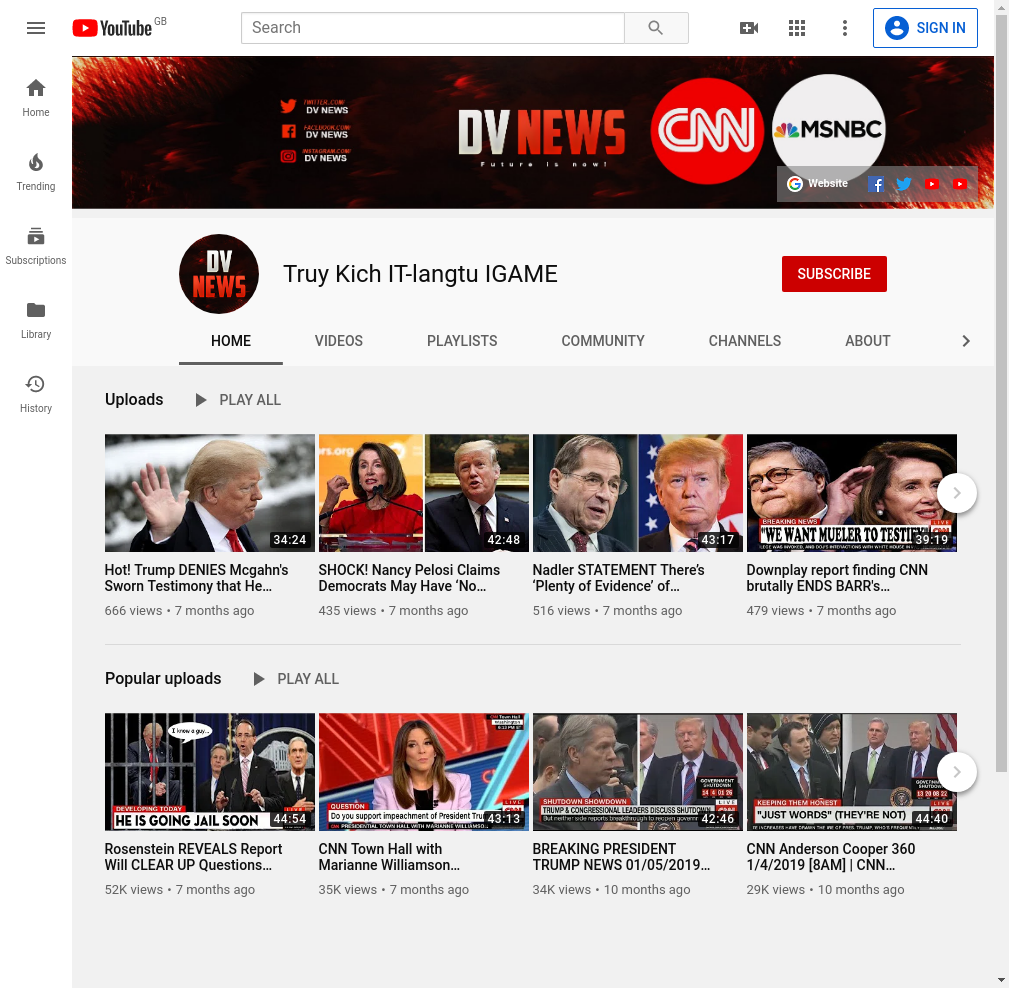

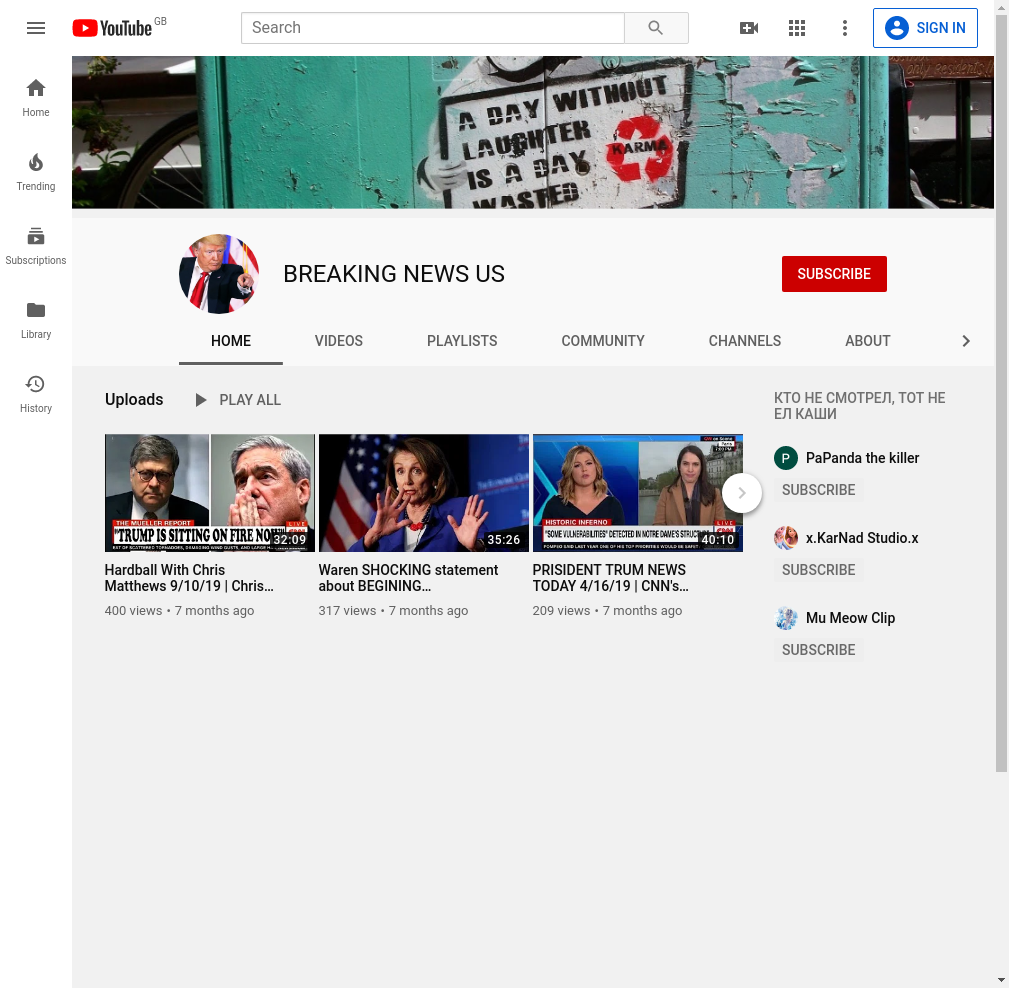

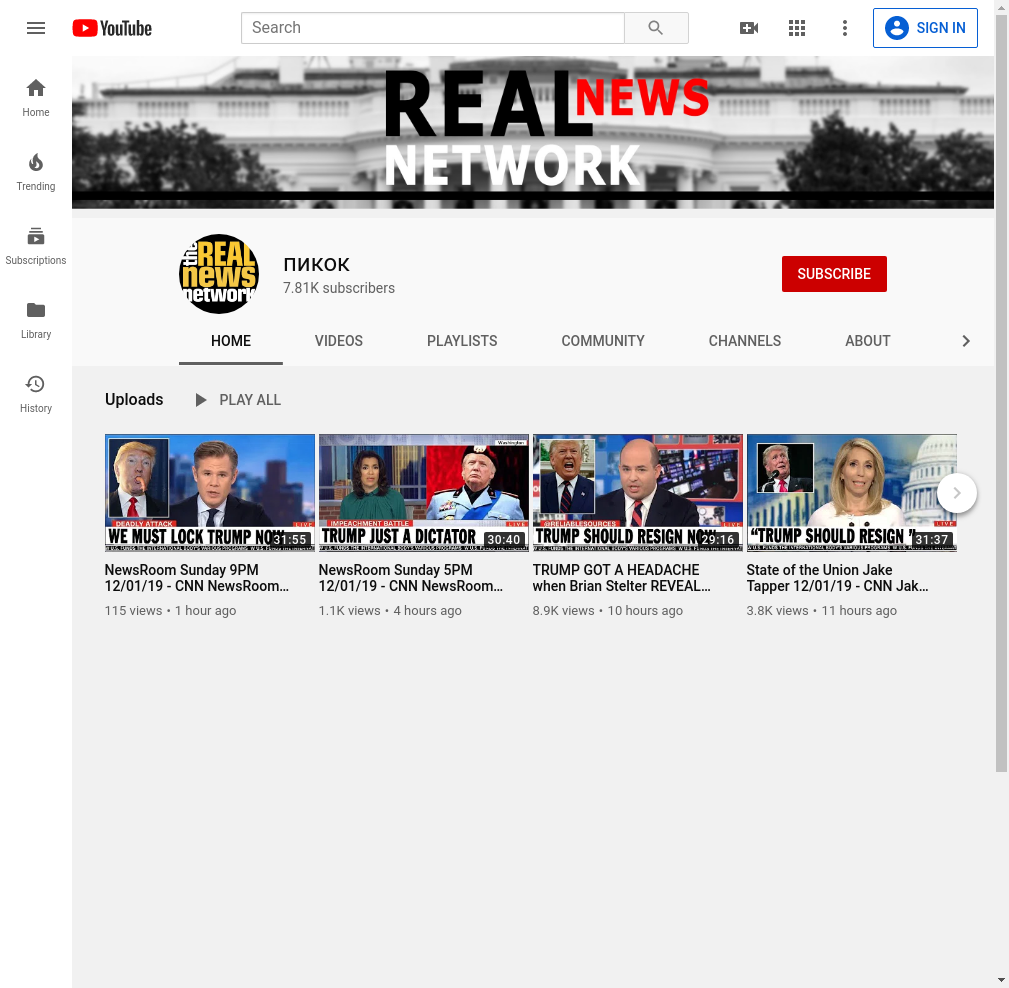

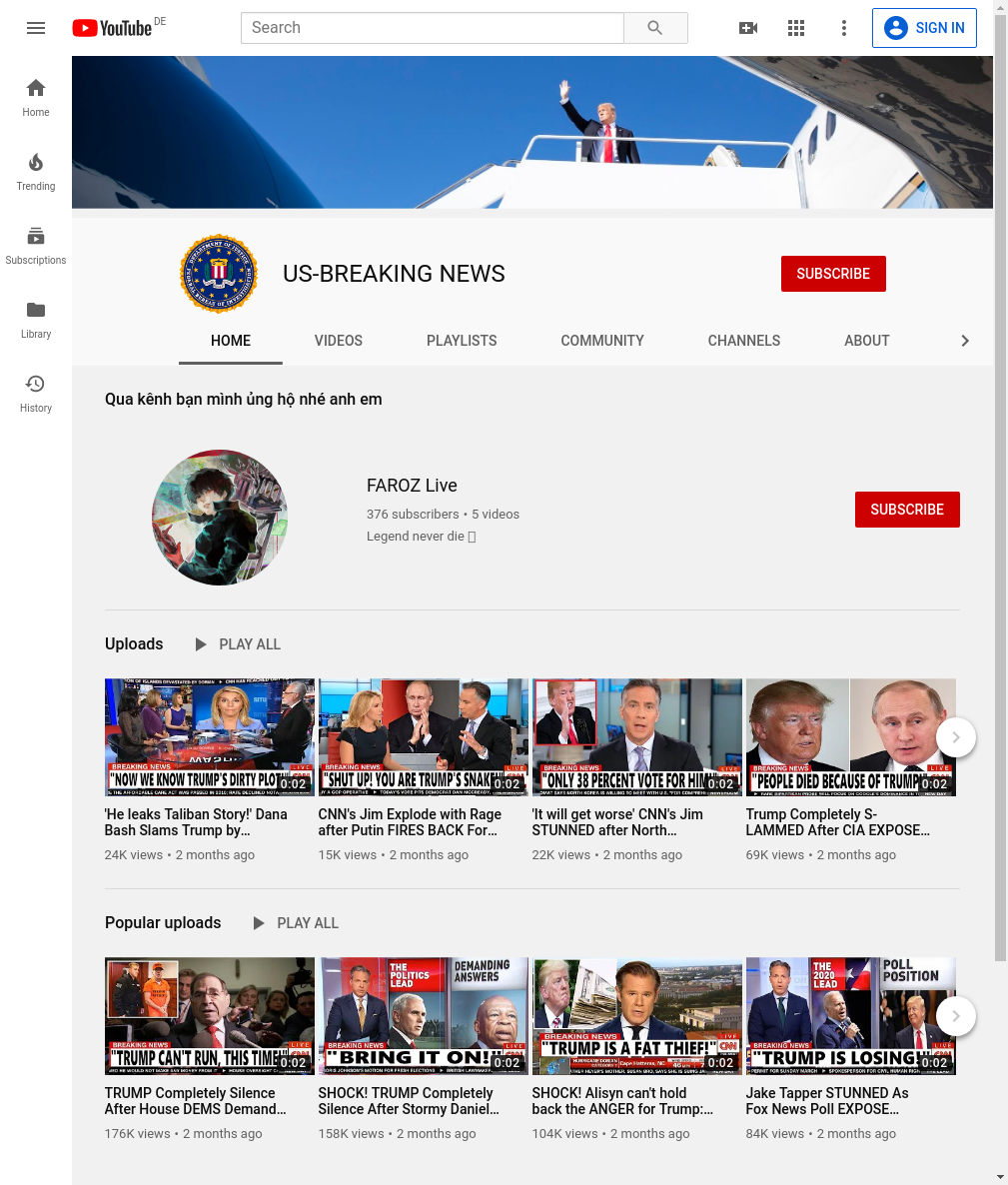

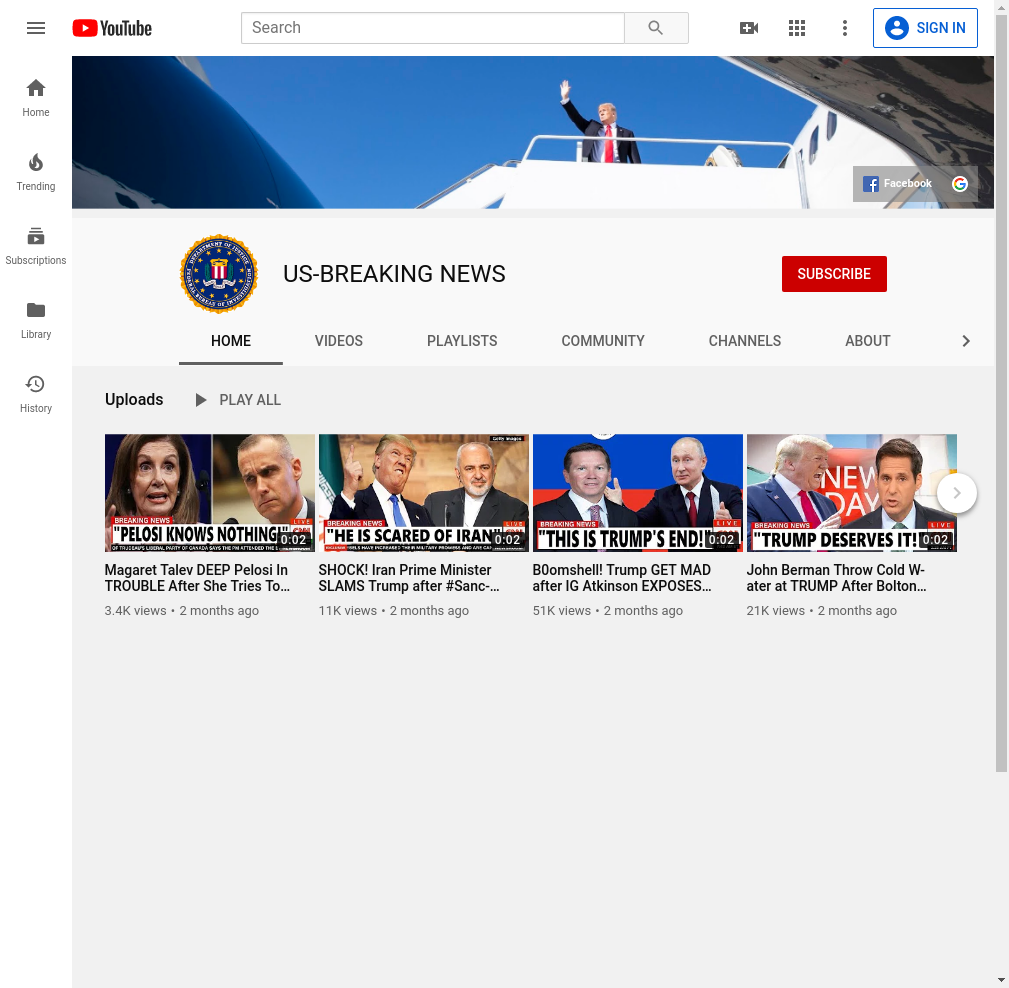

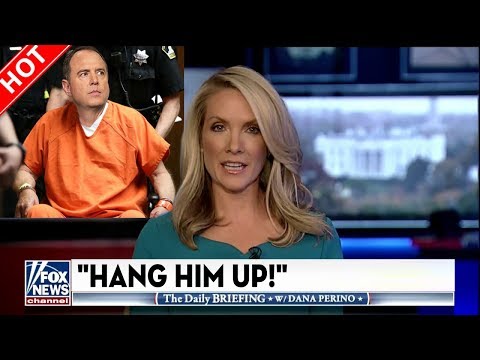

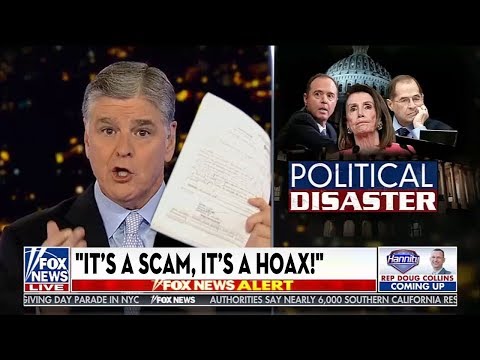

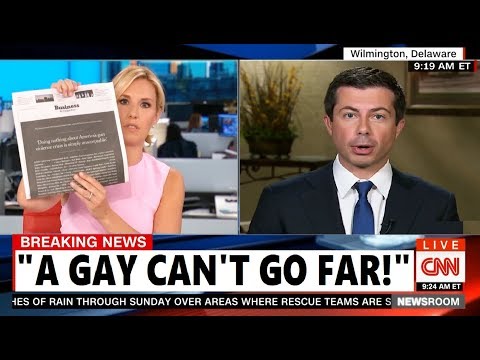

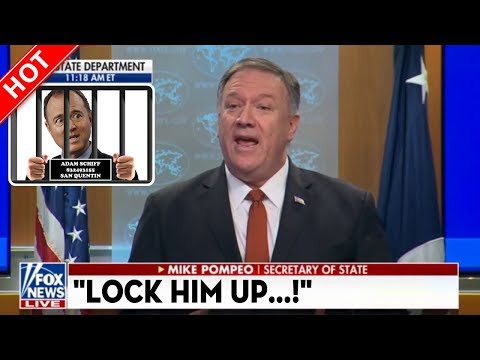

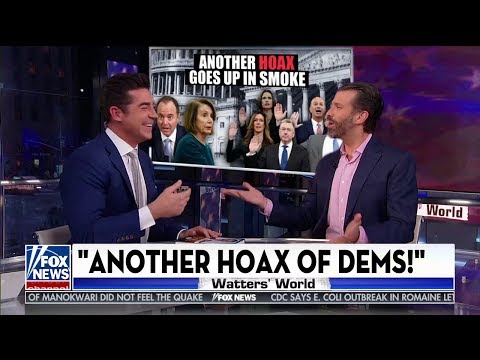

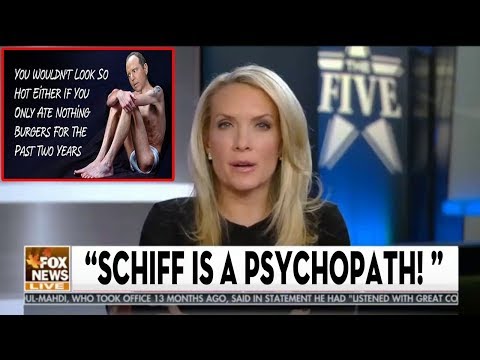

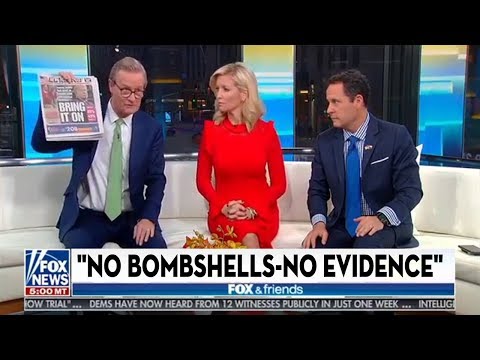

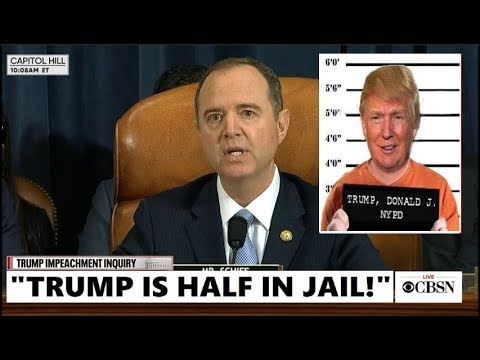

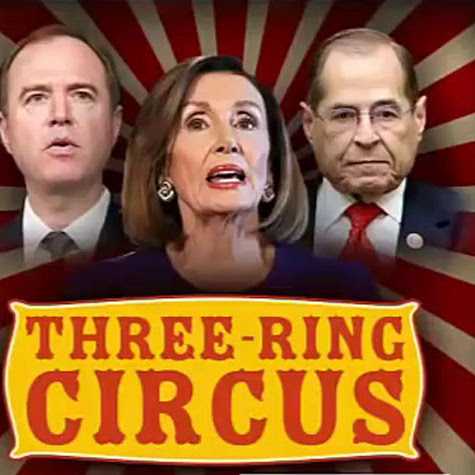

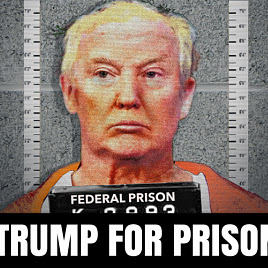

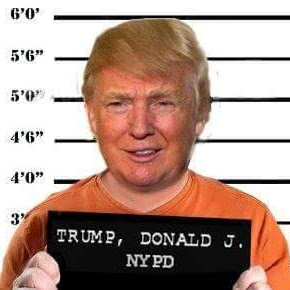

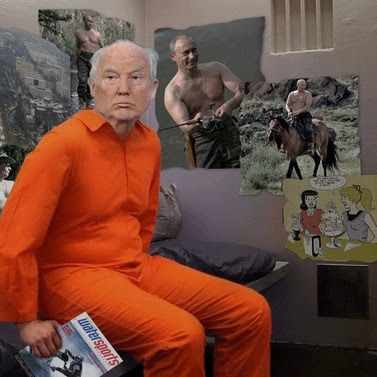

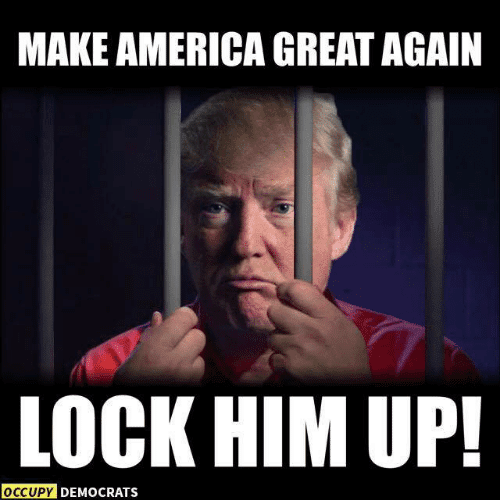

Video thumbnails are doctored to show Democratic and Republican leadership in outrageous and violent scenarios, such as being locked in a jail cell or hanged by a noose. The headlines are also edited to say things like "HANG HIM UP!", which a reputable news organization would never do.

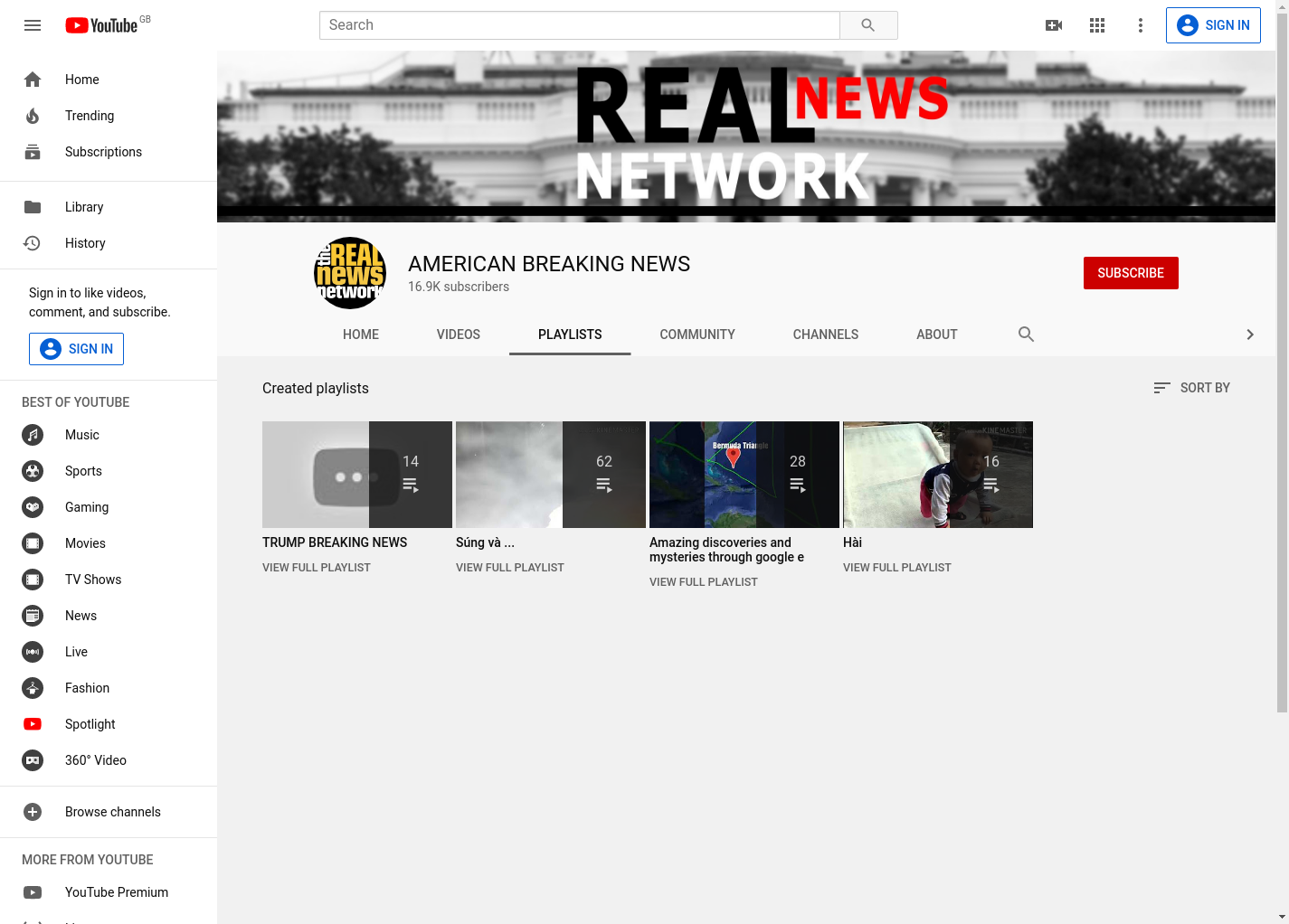

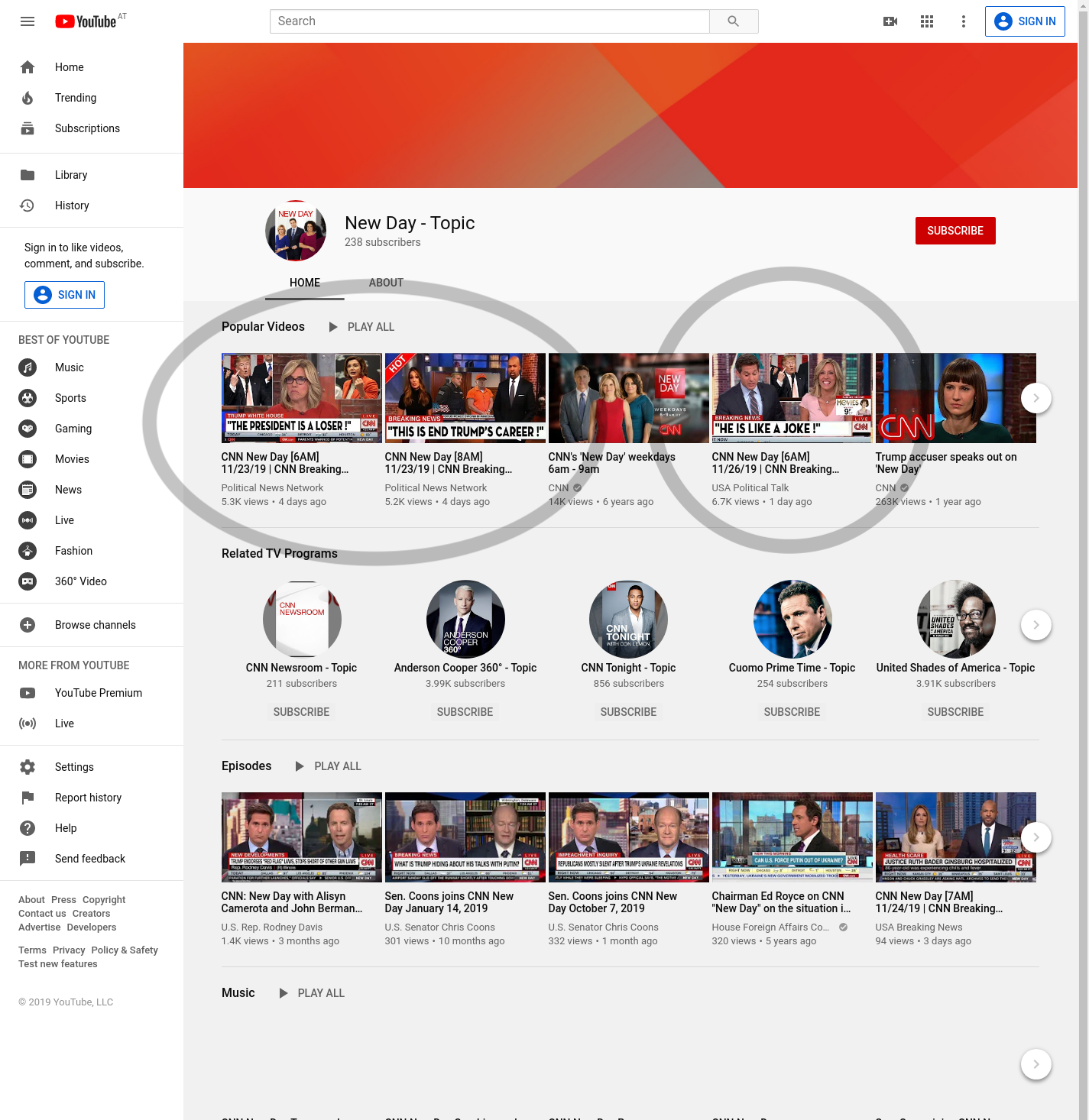

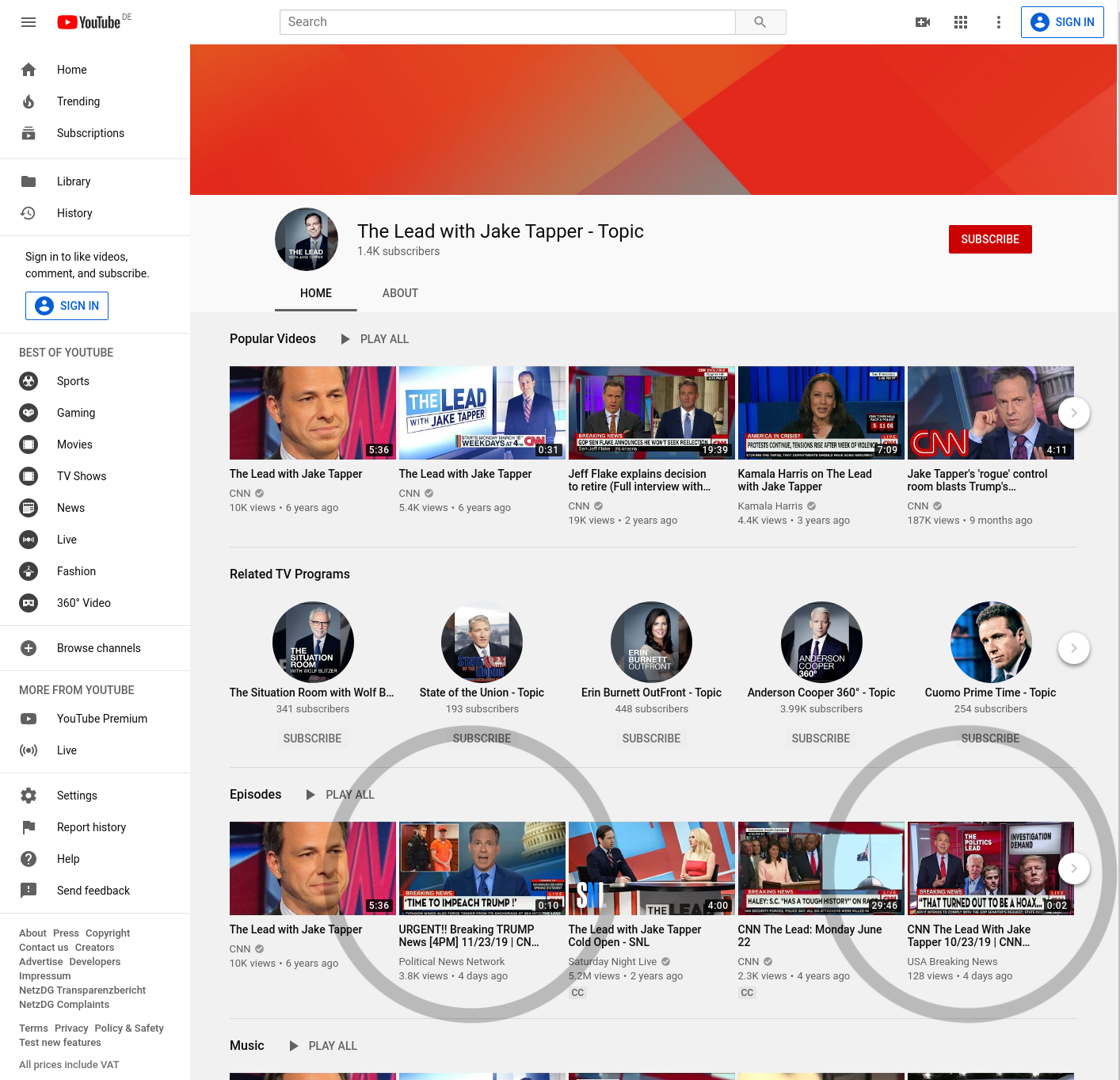

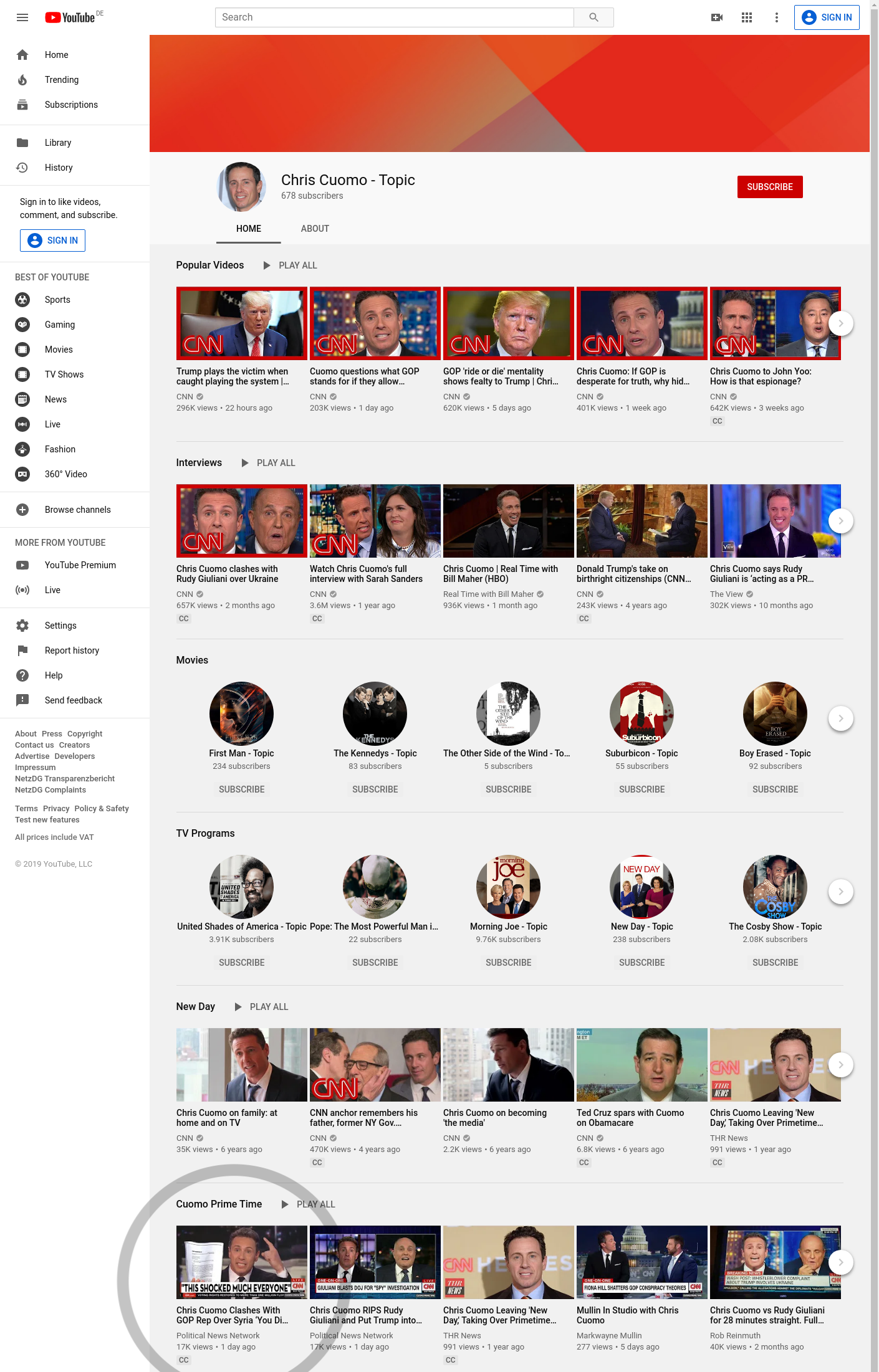

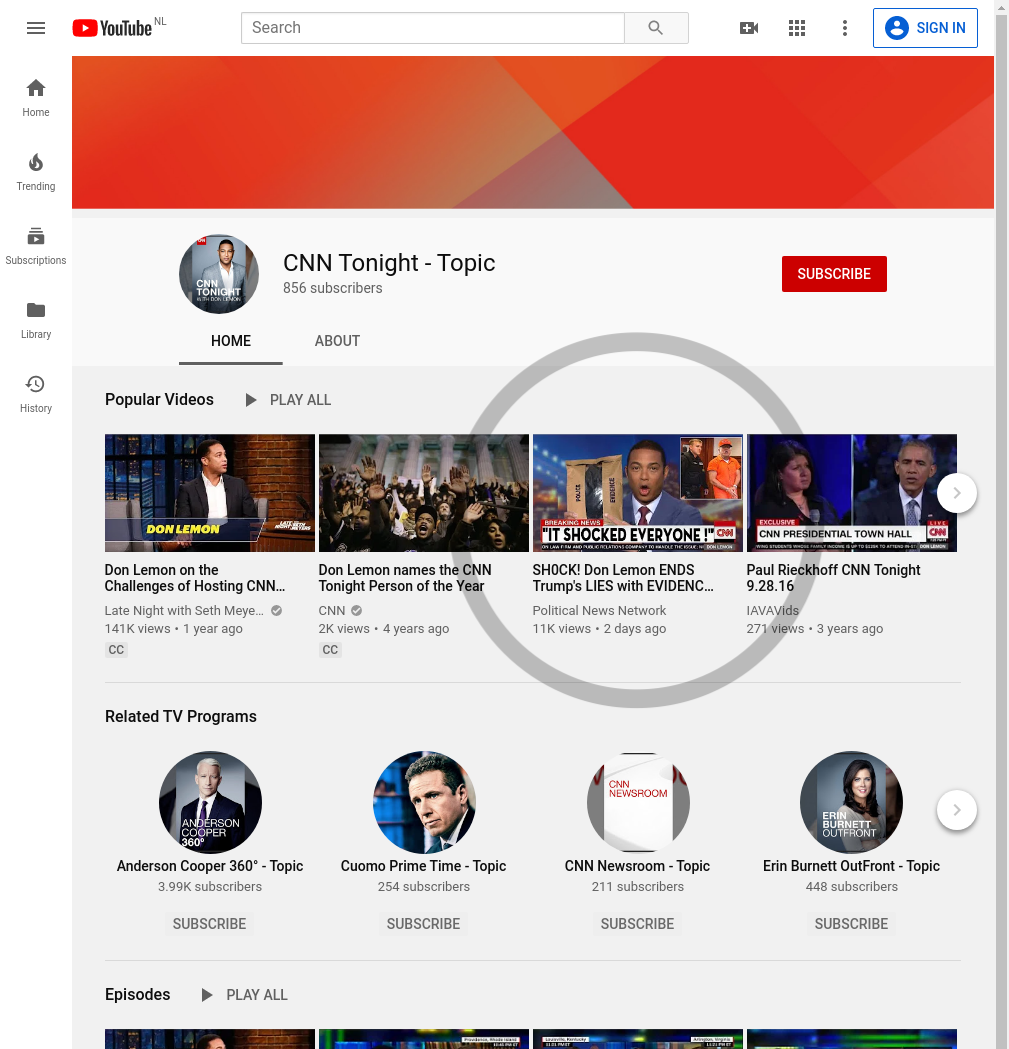

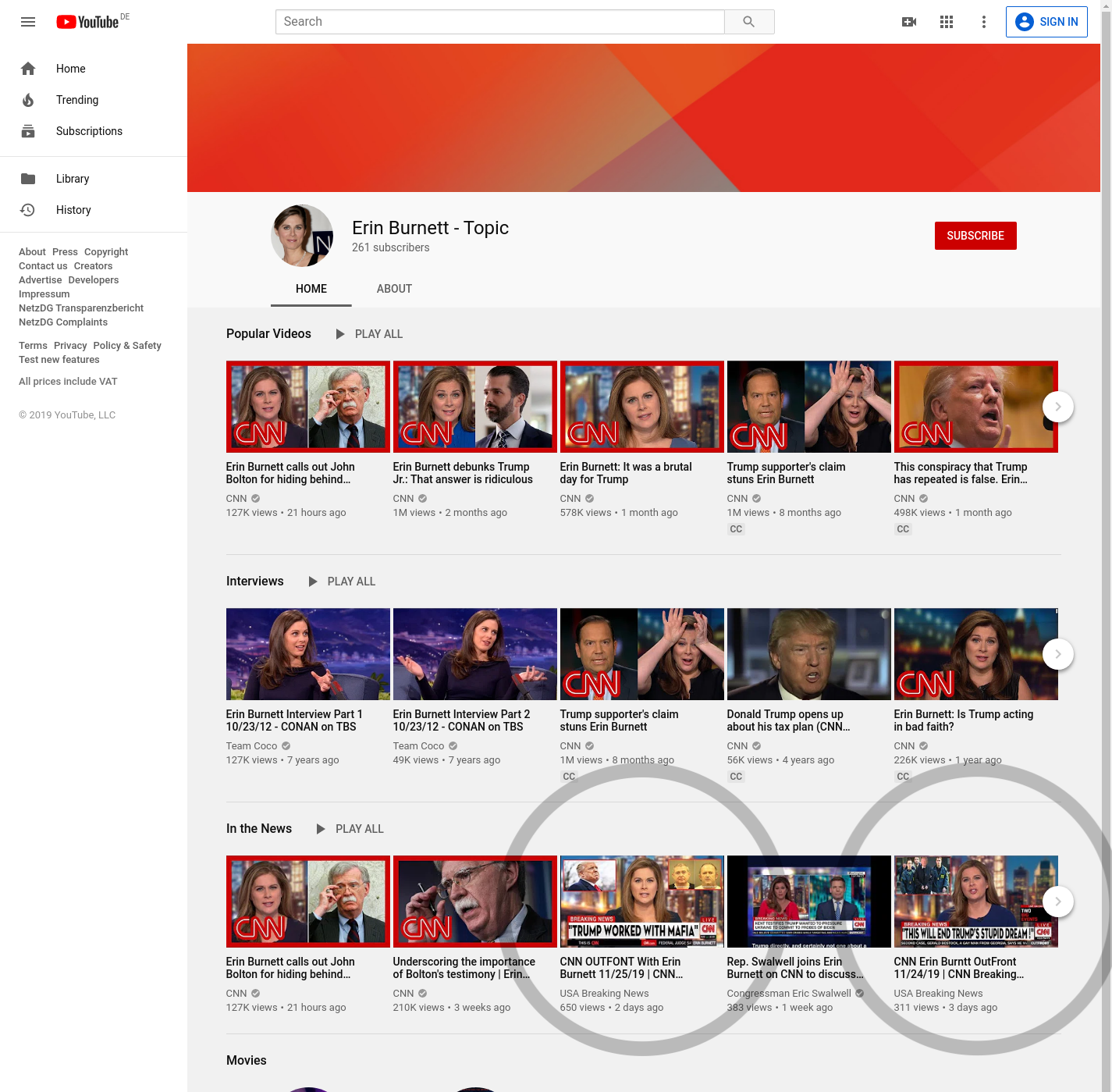

YouTube Auto-generated Topic Channels are channels that are automatically created by YouTube's algorithms to collect videos on certain topics (TV series, people, events, etc.).

For example, even though CNN posts videos of all of its anchors to a single YouTube channel, YouTube automatically creates separate, official-looking channels for each anchor's show, like The Lead with Jake Tapper, which aggregates videos of Jake Tapper.

According to YouTube, they help "boost your channel’s search and discovery potential on YouTube." We found videos from this campaign promoted on some of these YouTube topic channels. Anyone who subscribes to these YouTube topic channels will be pushed content from this campaign, effectively increasing the campaign's audience and reach.

While this content might appear to be obviously edited to savvy viewers, there is still a proportion of users commenting on these videos who do not question the content's source and even believe the videos are being uploaded by sources like CNN:

"after I posted my comments I noticed the channel was not CNN and am now going to unsubscribe to the channel in question. Thanks for your eagle eye and back up. Much appreciated. It gives CNN a bad reputation. Wonder how to stop it?"

While the content posted by this campaign is certainly troubling and the outrageous tone they strike seems to have malicious intent, due to the anonymous nature of the internet it is difficult to make a definitive statement about the actors behind this campaign without access to the private metadata behind these accounts.

It seems to be a "professional" operation given the number of hacked accounts they have access to, the amount of human effort involved in the campaign, the likely automated tools developed to help create the doctored images, and the frequency at which content is uploaded (every hour or few hours). Moreover, most channels don't seem to be monetized or to have a financial incentive to drive views to their channels.

Regardless of the source, the content should be taken down as it is disinformation, and the campaign should be blocked from creating further accounts.

† These numbers represent less than 30 days of data collection. The actors behind this campaign frequently delete old content and accounts, presumably to avoid detection. It is very likely that the numbers for the totality of the campaign over many months are significantly larger.

†† 34% of these channels were linked to the Russia or Ukraine region.

††† We identify "troll commenters" as YouTube profiles that comment on multiple channels linked to the campaign.
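The "troll commenter" criterion in the last footnote can be illustrated with a minimal sketch. This is not our production code: the function name `find_cross_channel_commenters` and the `min_channels` threshold are assumptions made for illustration, and the sample pairs are taken from the uploader and commenter examples listed at the end of this post, plus one hypothetical one-off viewer.

```python
from collections import defaultdict


def find_cross_channel_commenters(comments, campaign_channels, min_channels=2):
    """Return commenters seen on at least `min_channels` distinct campaign channels.

    `comments` is an iterable of (commenter, channel) pairs. The threshold of 2
    is an illustrative assumption, not a value stated in the post.
    """
    channels_by_commenter = defaultdict(set)
    for commenter, channel in comments:
        if channel in campaign_channels:
            channels_by_commenter[commenter].add(channel)
    return {
        commenter: sorted(channels)
        for commenter, channels in channels_by_commenter.items()
        if len(channels) >= min_channels
    }


# (commenter, channel) pairs from the examples in this post.
comments = [
    ("Hibo Maxamad", "Herrare"),
    ("Hibo Maxamad", "QTN NEWS"),
    ("Hibo Maxamad", "Political News Network"),
    ("Joe Martinez", "US-BREAKING NEWS"),
    ("Joe Martinez", "US-BREAKING NEWS"),
    ("Joe Martinez", "US-BREAKING NEWS (2)"),
    ("One-off Viewer", "QTN NEWS"),  # hypothetical profile, added for contrast
]
campaign_channels = {
    "Herrare",
    "QTN NEWS",
    "Political News Network",
    "US-BREAKING NEWS",
    "US-BREAKING NEWS (2)",
}

flagged = find_cross_channel_commenters(comments, campaign_channels)
for commenter, channels in sorted(flagged.items()):
    print(commenter, "->", channels)
```

Counting distinct channels rather than raw comments means that a profile commenting many times on a single channel is not flagged on that basis alone.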

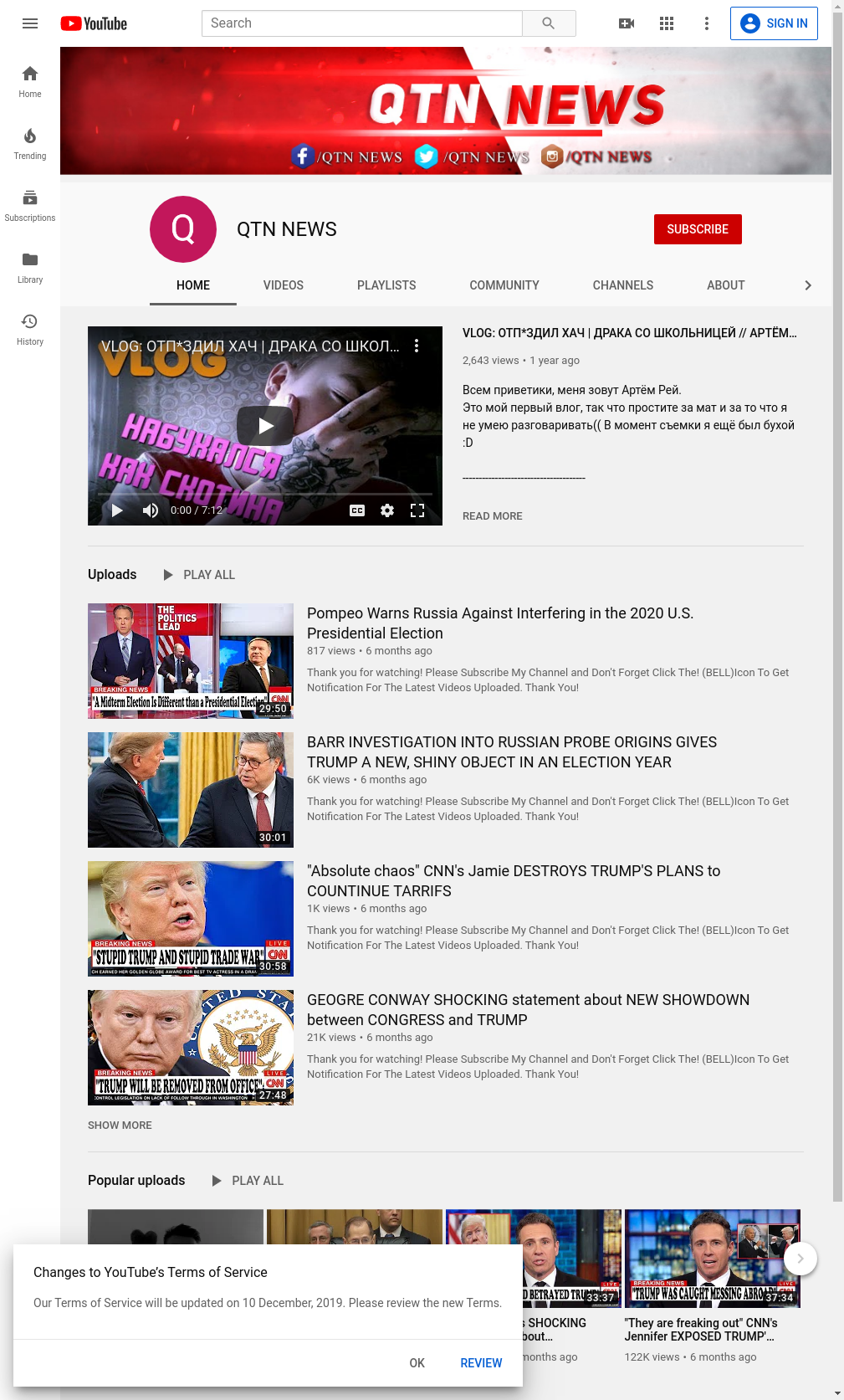

Using features "rewarded" by the algorithm like YouTube Premieres to gain a boost in viewership. Using keyword stuffing for SEO.

Use of outright doctored images and fake or sensationalist headlines to attract views.

Network of fake user profiles that comment across different videos to stoke outrage.

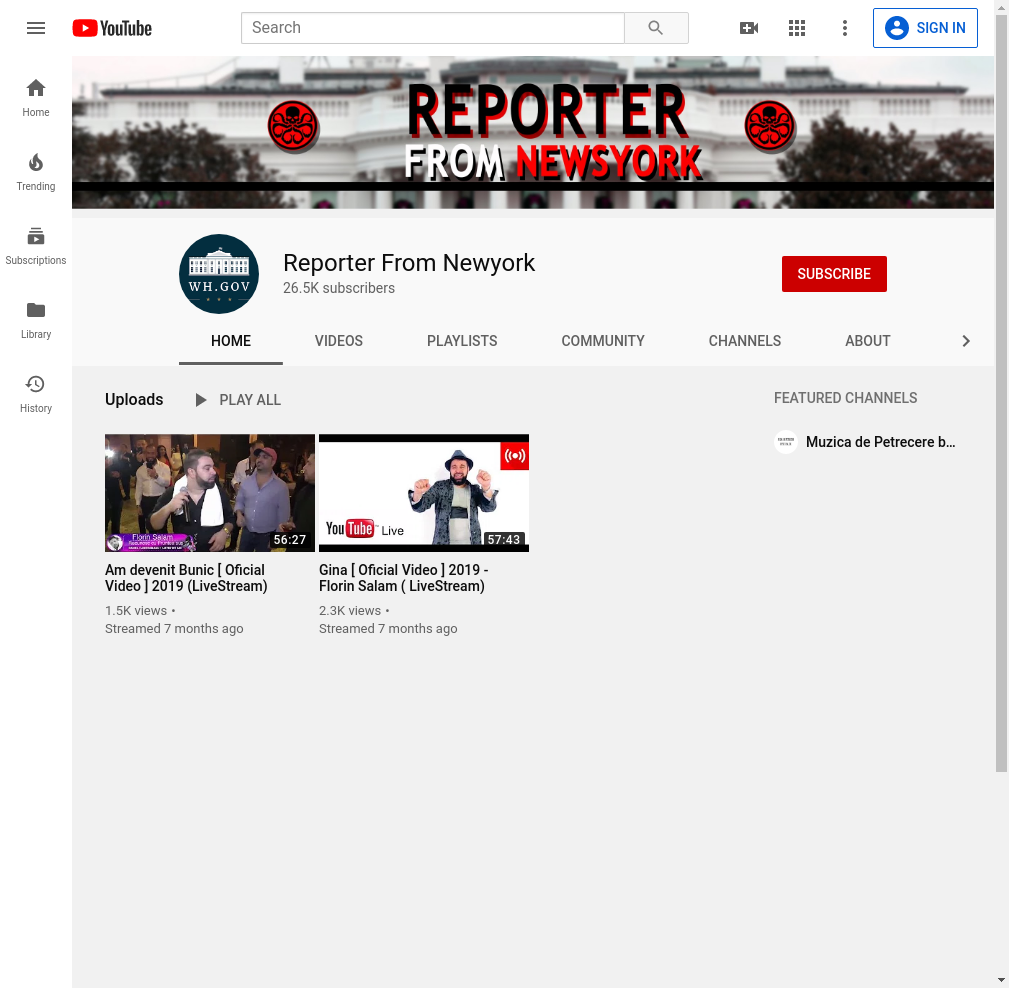

Using hacked video gamer / vlogger YouTube accounts to boost viewership and gain trust with the YouTube algorithm.

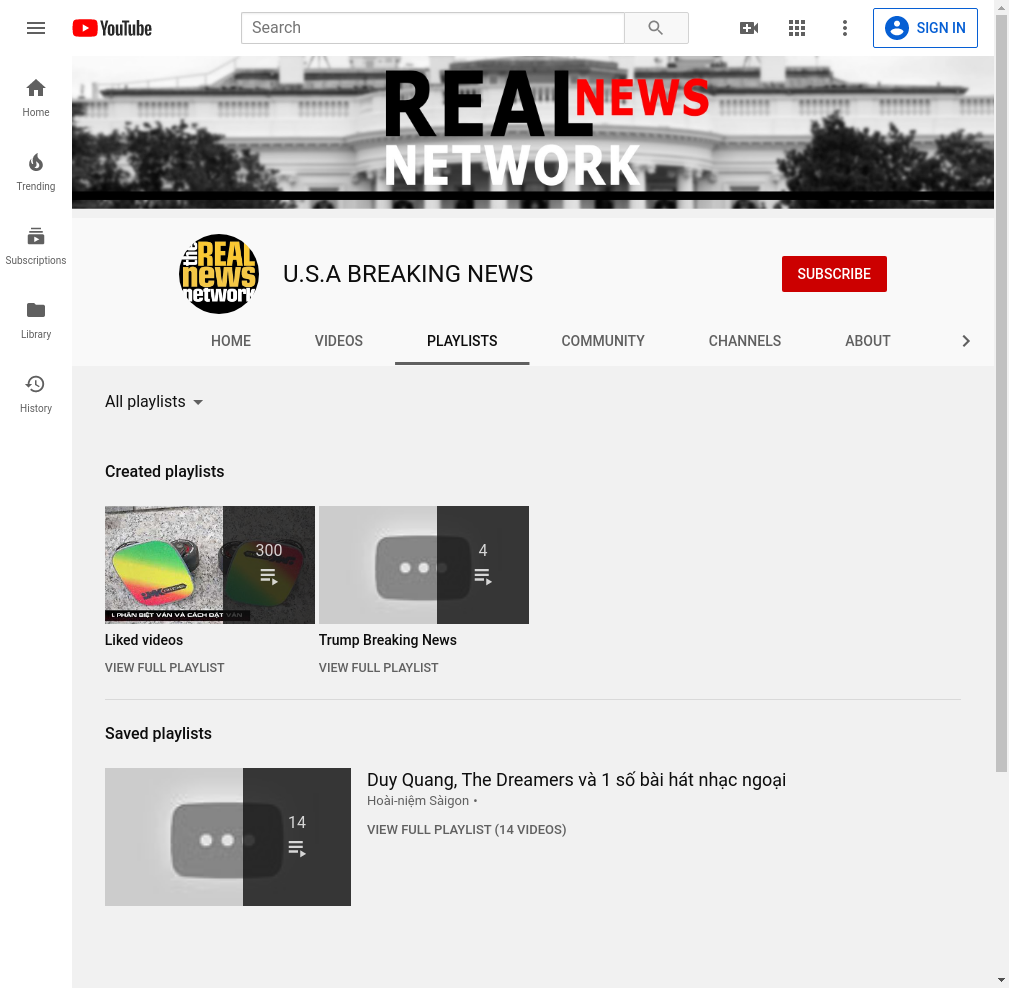

Impersonating media organizations like CNN and Fox News to deceive viewers.

Deleting old content and uploading new content to different channels periodically to avoid detection and flagging.

Our OSINT toolkit software operates by letting an intelligence analyst give examples of content they would like to further investigate. The toolkit then searches PAI (Publicly Available Information) sources like YouTube or other social media sites to find associated or similar content.

Provide a list of seed content that is of interest such as keywords, topics, phrases, or example accounts that post similar content.

Using natural language processing, the content is fingerprinted so that information sources can be searched accurately and effectively.

Efficiently scan millions of pieces of existing and new content being posted in real time onto public information sources, surfacing content that matches the fingerprint.

New content found is fingerprinted and the search space is expanded to find more content of interest.
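The four steps above (seed, fingerprint, scan, expand) can be sketched as a small loop. This is a toy illustration under stated assumptions, not the toolkit's actual implementation: the "fingerprint" here is just a set of lowercase word tokens compared with Jaccard similarity, standing in for the real natural language processing, and the names `fingerprint`, `jaccard`, `expand_search`, and the `threshold` and `max_rounds` parameters are all hypothetical.

```python
import re


def fingerprint(text):
    """Toy fingerprint: the set of lowercase word tokens in the text."""
    return frozenset(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard(a, b):
    """Jaccard similarity between two token sets (0.0 if either is empty)."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def expand_search(seeds, corpus, threshold=0.3, max_rounds=5):
    """Iteratively surface corpus items similar to the seeds or to earlier matches."""
    fingerprints = [fingerprint(seed) for seed in seeds]
    matched = []
    remaining = list(corpus)
    for _ in range(max_rounds):
        # Scan: keep items close enough to any known fingerprint.
        newly_found = [
            doc
            for doc in remaining
            if any(jaccard(fingerprint(doc), fp) >= threshold for fp in fingerprints)
        ]
        if not newly_found:
            break
        matched.extend(newly_found)
        # Expand: fingerprint the new matches so the next round can reach further.
        fingerprints.extend(fingerprint(doc) for doc in newly_found)
        remaining = [doc for doc in remaining if doc not in newly_found]
    return matched


# Hypothetical seed and corpus, for illustration only.
seeds = ["breaking news trump impeachment"]
corpus = [
    "breaking news impeachment hearing today",
    "impeachment hearing today live stream",
    "how to bake sourdough bread",
]
matches = expand_search(seeds, corpus)
print(matches)
```

In this example the second title shares too few words with the seed to match directly, but it is picked up in the second round because it resembles the first match. That is the point of the expansion step: content found in one pass widens the search for the next.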

We have compiled the data we collected into a dataset of structured JSON files that contain information on the YouTube accounts and videos in the campaign. Since many of these accounts will be deleted or banned in the future, the dataset serves as an archive of comments, images, video thumbnails, and screenshots so that researchers can analyze them later.

Our findings show an example of a coordinated social media campaign that has consequences for the political climate and for events like the upcoming 2020 U.S. presidential election.

The fact that these networks still exist and thrive on social media sites like YouTube nearly two years after they were first discovered suggests that the surface area to cover on social media sites is far too large and that automated solutions for finding and analyzing these campaigns are needed.

If you would like to learn more about our intelligence tools and software, have questions or comments on this post, or would like to contact us for another reason, please reach out to us at team@plasticity.ai.

If you are from the government or Department of Defense, please reach out to us at dod@plasticity.ai.

Special thanks to Darren Linvill at Clemson University for taking a look at the findings and the data.

Video

Comment

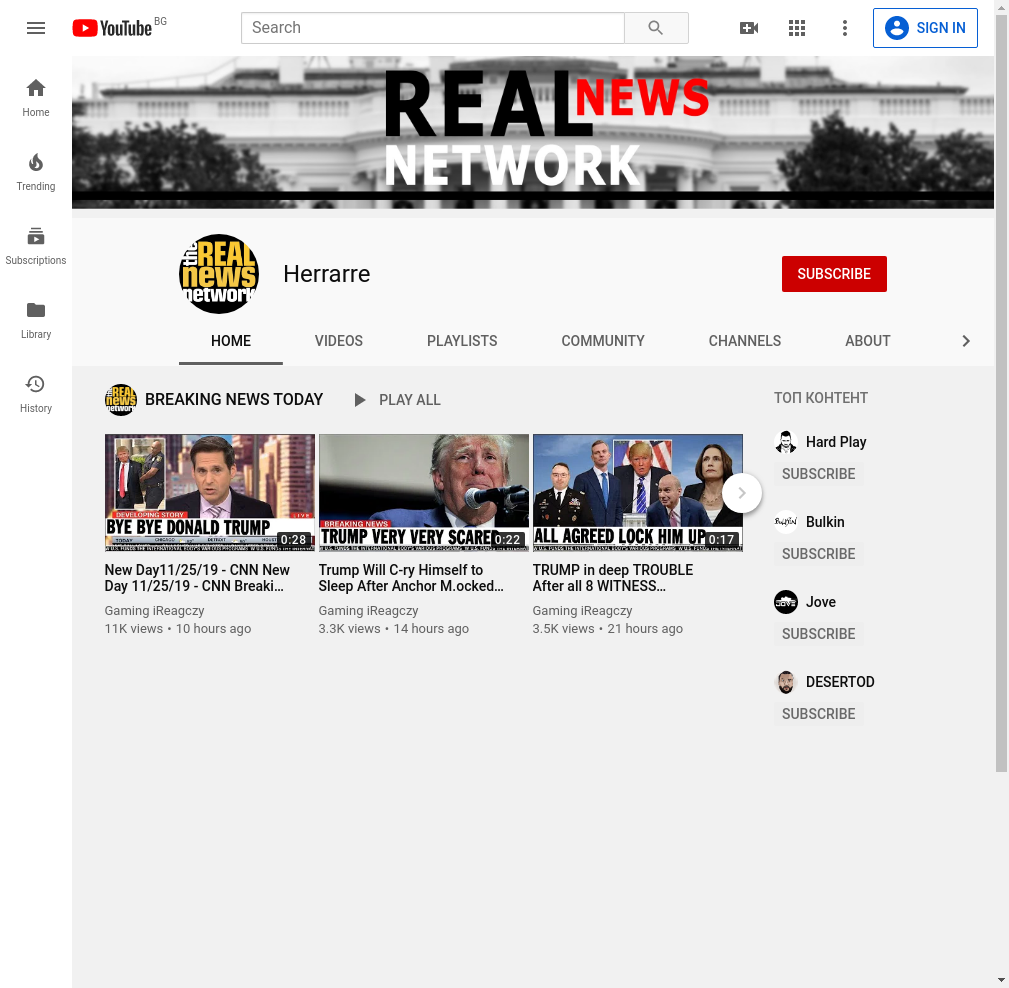

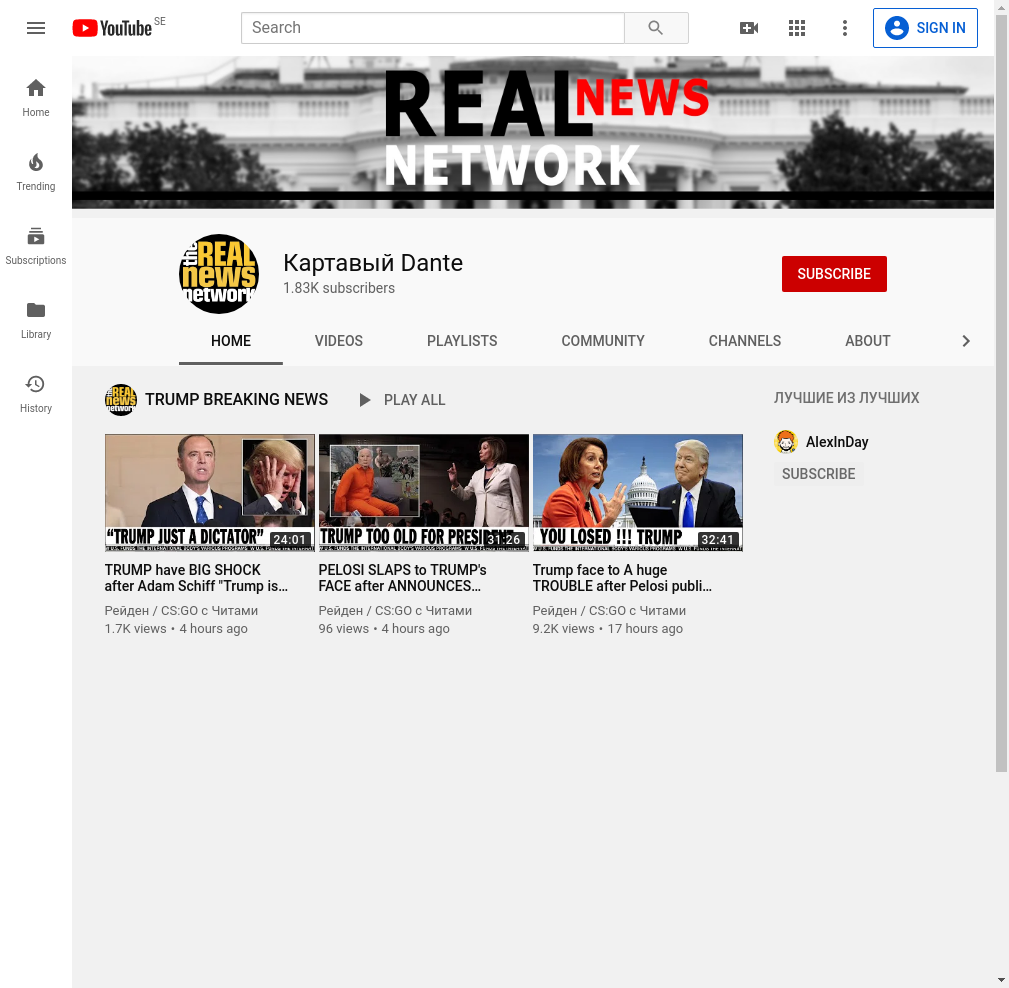

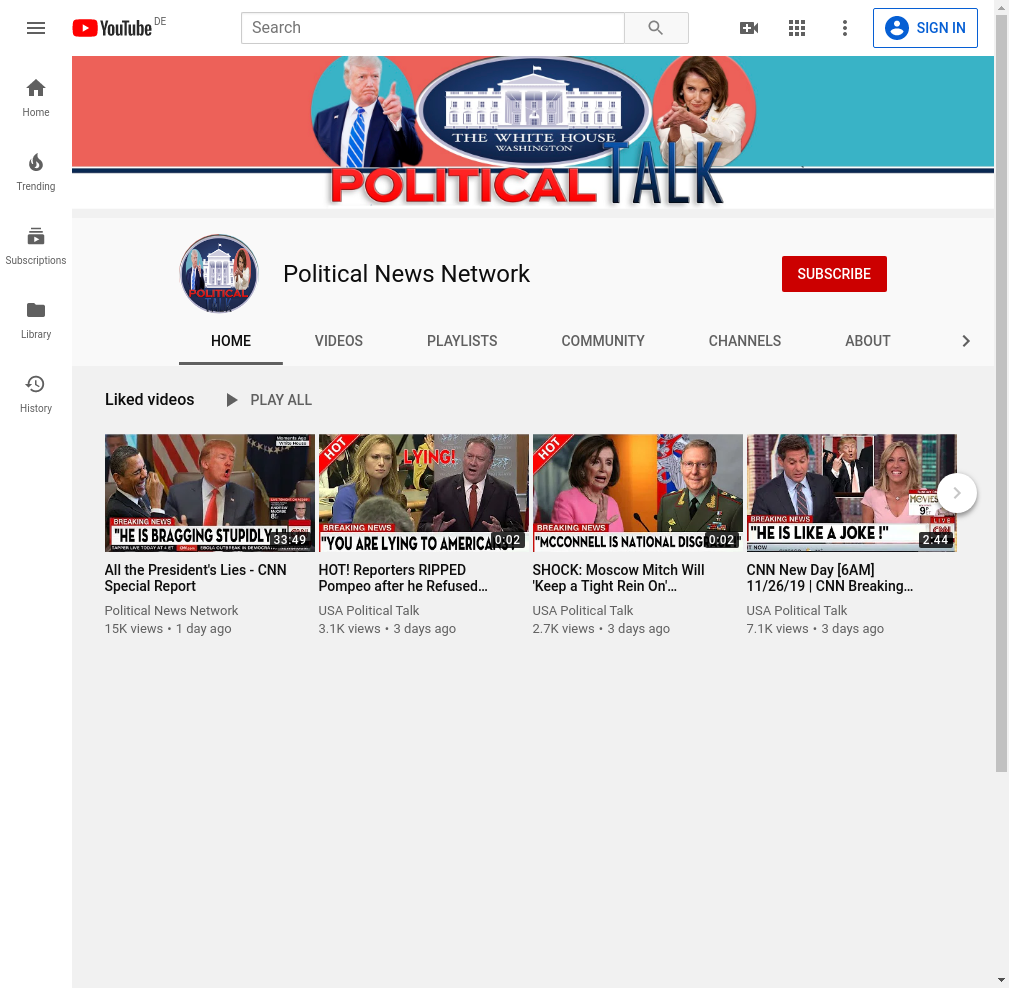

Uploaded By: Herrare

Comment By: Hibo Maxamad

Uploaded By: QTN NEWS

Comment By: Hibo Maxamad

Uploaded By: Political News Network

Comment By: Hibo Maxamad

Video

Comment

Uploaded By: US-BREAKING NEWS

Comment By: Joe Martinez

Uploaded By: US-BREAKING NEWS

Comment By: Joe Martinez

Uploaded By: US-BREAKING NEWS (2)

Comment By: Joe Martinez

Video

Comment

Uploaded By: Herrarre

Comment By: Sheik Yo Booty

Uploaded By: Gaming iReagczy

Comment By: Sheik Yo Booty

Uploaded By: Luizin

Comment By: Sheik Yo Booty

Video

Comment

Uploaded By: Herrarre

Comment By: Rafael Salas

Uploaded By: Truy Kich IT-langtu IGAME

Comment By: Rafael Salas

Uploaded By: US-BREAKING NEWS

Comment By: Rafael Salas